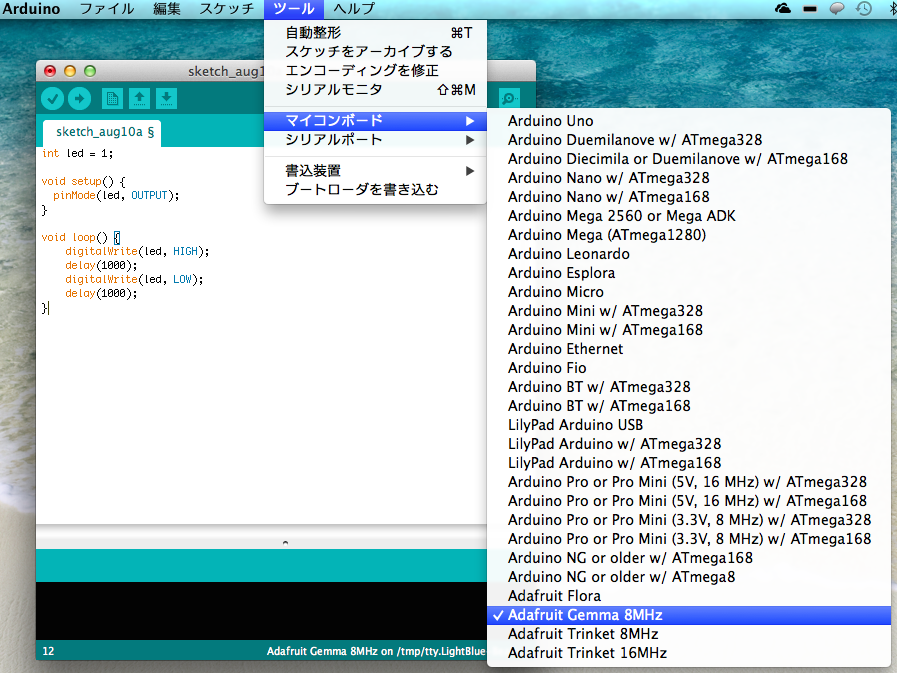

環境は以下

– Windows 8.0

– VS 2012

– openFrameworks 0.8.3(x86)

まずMSのサイトからKinect SDKをダウンロードしてきてインストール。

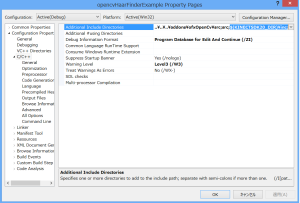

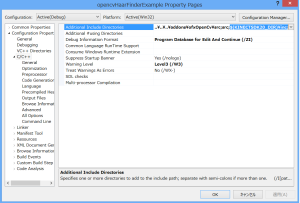

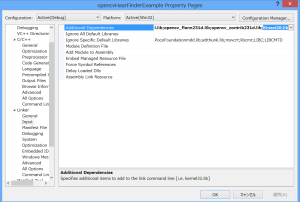

1. Includeに$(KINECTSDK20_DIR)¥inc;を追加

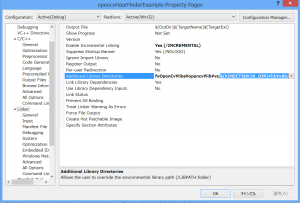

2. Libに$(KINECTSDK20_DIR)¥lib¥x86;を追加

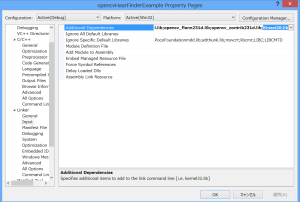

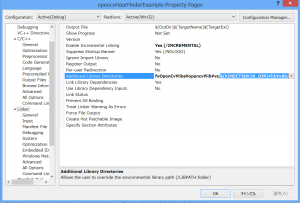

3. DependenciesにKinect20.libを追加

4. コードを書く

Kinect for Windows v2 Developer Preview入門 ― C++プログラマー向け連載をほぼコピってof向けに整形しただけですが…

ofApp.h

#pragma once

#include "ofMain.h"

#include "ofxOpenCv.h"

#include <kinect.h>

#include <Windows.h>

using namespace cv;

template

inline void SafeRelease( Interface *& pInterfaceToRelease )

{

if( pInterfaceToRelease != NULL ){

pInterfaceToRelease->Release();

pInterfaceToRelease = NULL;

}

}

class ofApp : public ofBaseApp{

public:

void setup();

void update();

void draw();

bool initializeKinectv2();

IKinectSensor* pSensor;

IDepthFrameSource* pDepthSource;

IColorFrameSource* pColorSource;

IBodyFrameSource* pBodySource;

IColorFrameReader* pColorReader;

IBodyFrameReader* pBodyReader;

IDepthFrameReader* pDepthReader;

IFrameDescription* pDepthDescription;

IFrameDescription* pColorDescription;

ICoordinateMapper* pCoordinateMapper;

// buffer

ofxCvGrayscaleImage grayscaleImage;

ofxCvColorImage colorscaleImage;

//

int depthWidth, depthHeight;

unsigned int depthBufferSize;

int colorWidth, colorHeight;

unsigned int colorBufferSize;

};

ofApp.cpp

#include "ofApp.h"

//--------------------------------------------------------------

void ofApp::setup(){

ofSetVerticalSync(true);

ofBackground(255, 255, 255);

// ofSetFullscreen(true);

ofEnableAlphaBlending();

ofEnableSmoothing();

ofSetBackgroundAuto(true);

if (!this->initializeKinectv2())

exit();

}

bool ofApp::initializeKinectv2() {

HRESULT hResult = S_OK;

// Open Kinect

hResult = GetDefaultKinectSensor( &this->pSensor );

if( FAILED( hResult ) ){

std::cerr << "Error : GetDefaultKinectSensor" << std::endl;

return false;

}

hResult = this->pSensor->Open( );

if( FAILED( hResult ) ){

std::cerr << "Error : IKinectSensor::Open()" << std::endl;

return false;

}

// Open Source

hResult = this->pSensor->get_ColorFrameSource( &this->pColorSource );

if( FAILED( hResult ) ){

std::cerr << "Error : IKinectSensor::get_ColorFrameSource()" << std::endl;

return false;

}

hResult = this->pSensor->get_BodyFrameSource( &this->pBodySource );

if( FAILED( hResult ) ){

std::cerr << "Error : IKinectSensor::get_BodyFrameSource()" << std::endl;

return false;

}

hResult = pSensor->get_DepthFrameSource( &this->pDepthSource );

if( FAILED( hResult ) ){

std::cerr << "Error : IKinectSensor::get_DepthFrameSource()" << std::endl;

return false;

}

// Open Reader

hResult = this->pColorSource->OpenReader( &this->pColorReader );

if( FAILED( hResult ) ){

std::cerr << "Error : IColorFrameSource::OpenReader()" << std::endl;

return false;

}

hResult = this->pBodySource->OpenReader( &this->pBodyReader );

if( FAILED( hResult ) ){

std::cerr << "Error : IBodyFrameSource::OpenReader()" << std::endl;

return false;

}

hResult = this->pDepthSource->OpenReader( &this->pDepthReader );

if( FAILED( hResult ) ){

std::cerr << "Error : IDepthFrameSource::OpenReader()" << std::endl;

return false;

}

// get descriptions

hResult = pDepthSource->get_FrameDescription( &this->pDepthDescription );

if( FAILED( hResult ) ){

std::cerr << "Error : IDepthFrameSource::get_FrameDescription()" << std::endl;

return false;

}

hResult = pColorSource->get_FrameDescription( &this->pColorDescription );

if( FAILED( hResult ) ){

std::cerr << "Error : IColorFrameSource::get_FrameDescription()" << std::endl;

return false;

}

// get coordinate mapper

hResult = this->pSensor->get_CoordinateMapper( &this->pCoordinateMapper );

if( FAILED( hResult ) ){

std::cerr << "Error : IKinectSensor::get_CoordinateMapper()" << std::endl;

return false;

}

this->pDepthDescription->get_Width( &depthWidth ); // 512

this->pDepthDescription->get_Height( &depthHeight ); // 424

this->depthBufferSize = depthWidth * depthHeight * sizeof( unsigned short );

this->pColorDescription->get_Width( &colorWidth );

this->pColorDescription->get_Height( &colorHeight );

this->colorBufferSize = colorWidth * colorHeight * 4 * sizeof( unsigned char );

this->grayscaleImage.allocate(depthHeight, depthWidth);

this->colorscaleImage.allocate(colorHeight, colorWidth);

}

//--------------------------------------------------------------

void ofApp::update(){

// get depth frame

Mat bufferMat( depthHeight, depthWidth, CV_16SC1 );

Mat depthMat(depthHeight, depthWidth, CV_8UC1 );

cv::Mat colorBufferMat( colorHeight, colorWidth, CV_8UC4 );

cv::Mat colorMat( colorHeight, colorWidth, CV_8UC4 );

// Frame

IDepthFrame* pDepthFrame = nullptr;

HRESULT hResult = pDepthReader->AcquireLatestFrame( &pDepthFrame );

if(SUCCEEDED( hResult )){

hResult = pDepthFrame->AccessUnderlyingBuffer( &depthBufferSize, reinterpret_cast<UINT16**>( &bufferMat.data ) );

if( SUCCEEDED( hResult ) ){

bufferMat.convertTo(depthMat, CV_8U, -255.0f / 4500.0f, 255.0f);

grayscaleImage.setFromPixels(depthMat.data, depthWidth, depthHeight);

}

}

SafeRelease( pDepthFrame );

// get color frame

cv::Vec3b color[6];

color[0] = cv::Vec3b( 255, 0, 0 );

color[1] = cv::Vec3b( 0, 255, 0 );

color[2] = cv::Vec3b( 0, 0, 255 );

color[3] = cv::Vec3b( 255, 255, 0 );

color[4] = cv::Vec3b( 255, 0, 255 );

color[5] = cv::Vec3b( 0, 255, 255 );

IColorFrame* pColorFrame = nullptr;

hResult = pColorReader->AcquireLatestFrame( &pColorFrame );

if( SUCCEEDED( hResult ) ){

hResult = pColorFrame->CopyConvertedFrameDataToArray( colorBufferSize, reinterpret_cast<BYTE*>( colorBufferMat.data ), ColorImageFormat_Bgra );

/*

if( SUCCEEDED( hResult ) ){

cvtColor(colorBufferMat, colorMat, CV_BGR2RGB);

colorscaleImage.setFromPixels(colorMat.data, colorWidth, colorHeight);

}

*/

}

SafeRelease( pColorFrame );

// get body frame

IBodyFrame* pBodyFrame = nullptr;

hResult = pBodyReader->AcquireLatestFrame( &pBodyFrame );

if( SUCCEEDED( hResult ) ){

IBody* pBody[BODY_COUNT] = { 0 };

hResult = pBodyFrame->GetAndRefreshBodyData( BODY_COUNT, pBody );

if( SUCCEEDED( hResult ) ){

for( int count = 0; count < BODY_COUNT; count++ ){

BOOLEAN bTracked = false;

hResult = pBody[count]->get_IsTracked( &bTracked );

if( SUCCEEDED( hResult ) && bTracked ){

Joint joint[JointType::JointType_Count];

hResult = pBody[ count ]->GetJoints( JointType::JointType_Count, joint );

if( SUCCEEDED( hResult ) ){

// Left Hand State

HandState leftHandState = HandState::HandState_Unknown;

hResult = pBody[count]->get_HandLeftState( &leftHandState );

if( SUCCEEDED( hResult ) ){

ColorSpacePoint colorSpacePoint = { 0 };

hResult = pCoordinateMapper->MapCameraPointToColorSpace( joint[JointType::JointType_HandLeft].Position, &colorSpacePoint );

if( SUCCEEDED( hResult ) ){

int x = static_cast( colorSpacePoint.X );

int y = static_cast( colorSpacePoint.Y );

if( ( x >= 0 ) && ( x < colorWidth ) && ( y >= 0 ) && ( y < colorHeight ) ){ if( leftHandState == HandState::HandState_Open ){ cv::circle( colorBufferMat, cv::Point( x, y ), 75, cv::Scalar( 0, 128, 0 ), 5, CV_AA );

} else if( leftHandState == HandState::HandState_Closed ){

cv::circle( colorBufferMat, cv::Point( x, y ), 75, cv::Scalar( 0, 0, 128 ), 5, CV_AA );

} else if( leftHandState == HandState::HandState_Lasso ) {

cv::circle( colorBufferMat, cv::Point( x, y ), 75, cv::Scalar( 128, 128, 0 ), 5, CV_AA );

}

}

}

}

// Right Hand State

HandState rightHandState = HandState::HandState_Unknown;

hResult = pBody[count]->get_HandRightState( &rightHandState );

if( SUCCEEDED( hResult ) ){

ColorSpacePoint colorSpacePoint = { 0 };

hResult = pCoordinateMapper->MapCameraPointToColorSpace( joint[JointType::JointType_HandRight].Position, &colorSpacePoint );

if( SUCCEEDED( hResult ) ){

int x = static_cast( colorSpacePoint.X );

int y = static_cast( colorSpacePoint.Y );

if( ( x >= 0 ) && ( x < colorWidth ) && ( y >= 0 ) && ( y < colorHeight ) ){

if( rightHandState == HandState::HandState_Open ){

cv::circle( colorBufferMat, cv::Point( x, y ), 75, cv::Scalar( 0, 128, 0 ), 5, CV_AA );

}

else if( rightHandState == HandState::HandState_Closed ){

cv::circle( colorBufferMat, cv::Point( x, y ), 75, cv::Scalar( 0, 0, 128 ), 5, CV_AA );

}

else if( rightHandState == HandState::HandState_Lasso ){

cv::circle( colorBufferMat, cv::Point( x, y ), 75, cv::Scalar( 128, 128, 0 ), 5, CV_AA );

}

}

}

}

// Joint

for( int type = 0; type < JointType::JointType_Count; type++ ){

ColorSpacePoint colorSpacePoint = { 0 };

pCoordinateMapper->MapCameraPointToColorSpace( joint[type].Position, &colorSpacePoint );

int x = static_cast( colorSpacePoint.X );

int y = static_cast( colorSpacePoint.Y );

if( ( x >= 0 ) && ( x < colorWidth ) && ( y >= 0 ) && ( y < colorHeight ) ){

cv::circle( colorBufferMat, cv::Point( x, y ), 5, static_cast< cv::Scalar >( color[count] ), -1, CV_AA );

}

}

}

}

}

cvtColor(colorBufferMat, colorMat, CV_BGR2RGB);

colorscaleImage.setFromPixels(colorMat.data, colorWidth, colorHeight);

}

}

SafeRelease( pBodyFrame );

}

//--------------------------------------------------------------

void ofApp::draw(){

colorscaleImage.draw(0, 0);

grayscaleImage.draw(0, 0);

}

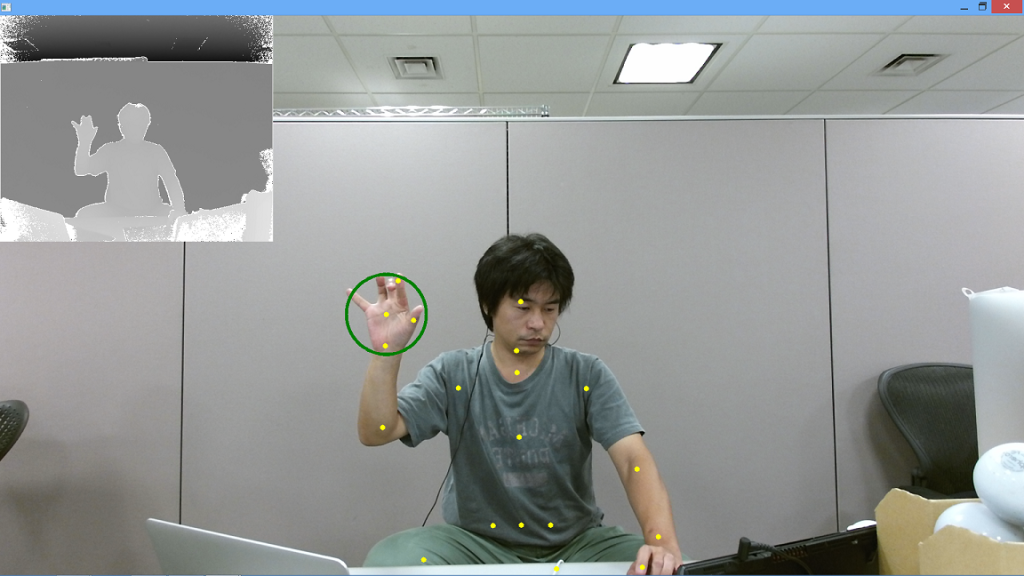

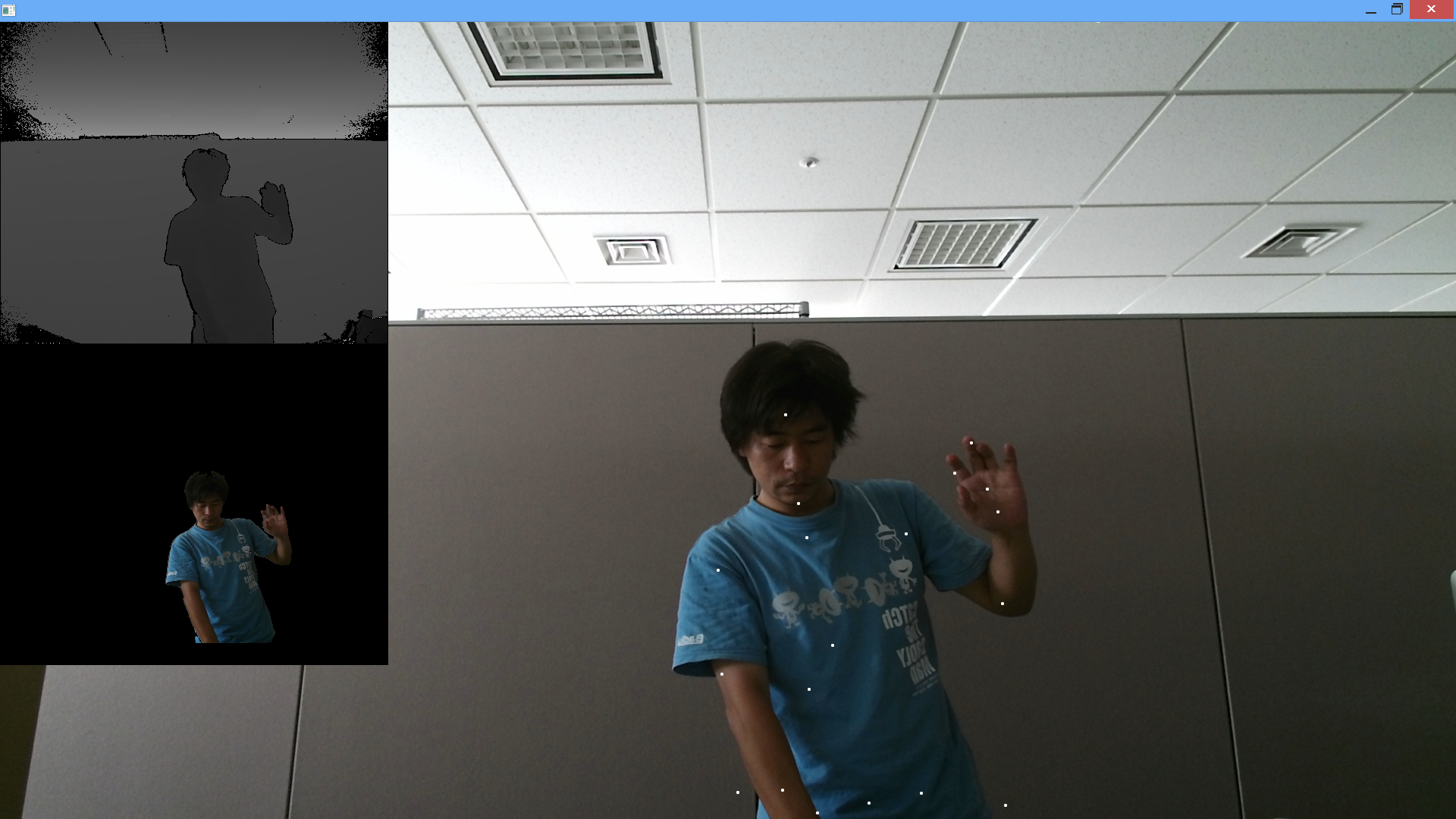

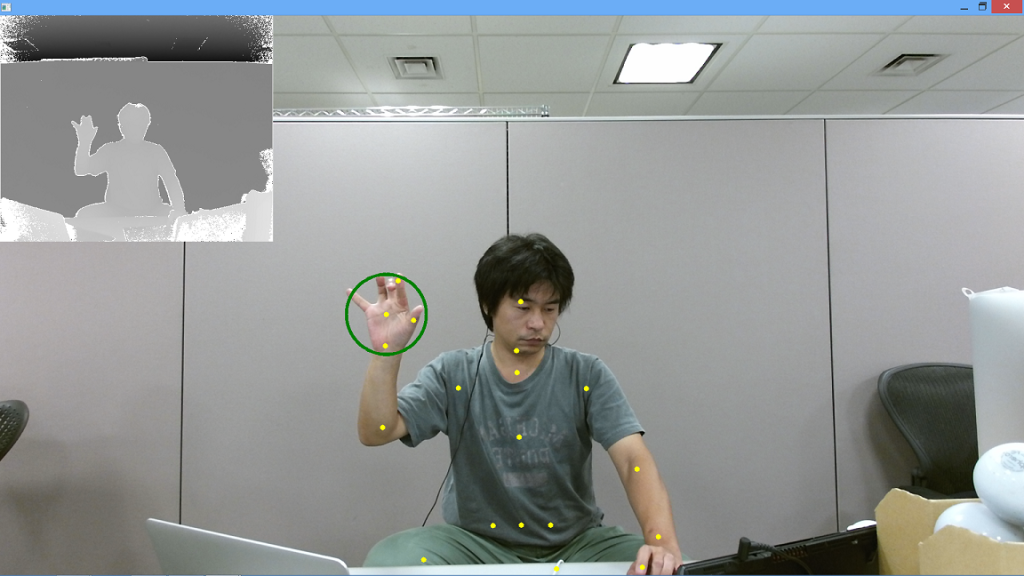

5. 実行